-

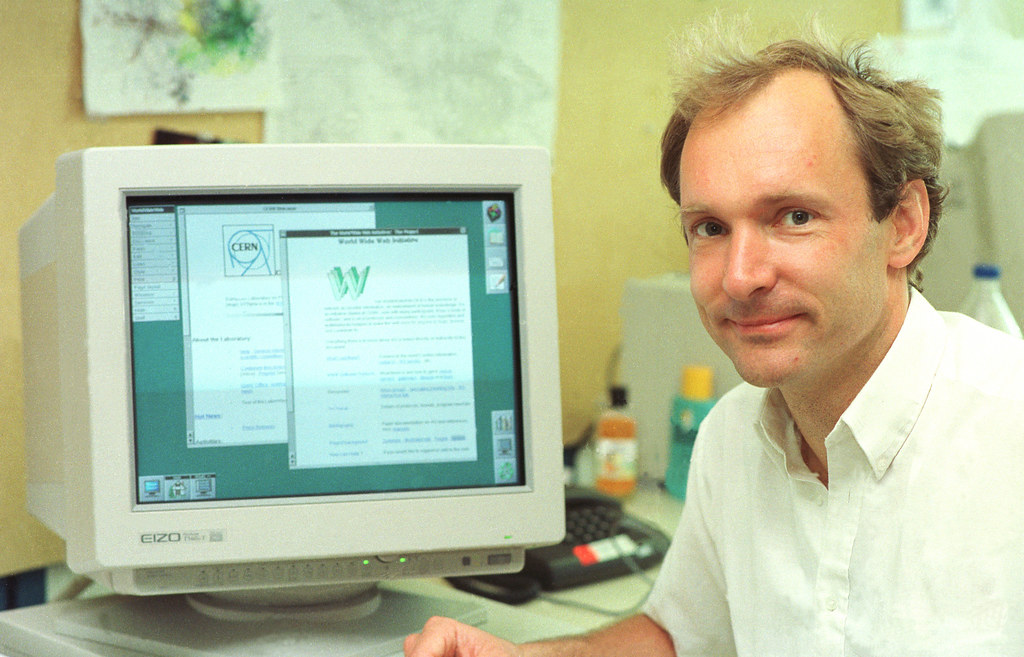

Book review: Tim Berners-Lee, This Is for Everyone

There is a particular kind of reading experience that feels less like consuming a book and more like walking alongside someone who helped shape the world you grew up in. Tim Berners-Lee’s memoir This Is for Everyone: The Unfinished Story of the World Wide Web, written with journalist Stephen Witt and published by Macmillan in September…

-

The Quiz and AI: Reconsidering what outcomes really matter

The emergence of generative AI has sparked ‘wicked problem’ questions among educators: What is a quiz for? Is it still useful? Measuring what students know by quizzing them suddenly feels inadequate when an AI system can answer factual (and deliberative) questions faster and more accurately than a human. However, beneath this dilemma sits something more interesting:…

-

Digital Archipelagos: A personal reflection on DHA2025 and its evolution in Australasia

Genesis, definitions, and memory: Digital humanities remains a contested term, as all definitions must be! The field has been variously described as the intersection of computing and humanities disciplines, a methodological commons, and a site of computational engagement with cultural materials. Such contestation is healthy, signalling vitality, yet it also generates considerable waffle. I am…

-

Source to Sea: tracking the Snowy River from Kosciuszko to Marlo

Australia’s Snowy River springs from the alpine snowmelt on Mt Kosciuszko, carving a 352-kilometre route through gorges, plains and forests before meeting Bass Strait at Marlo, Victoria. Our multi-day journey followed this legendary waterway, tracing its heartbeat. From Gippsland dairy flats to Kosciuszko’s rocky headwaters, every bend revealed a version of the Snowy, wild and…

-

The Future of the Essay in the Age of AI: A Practical Guide

Generative Artificial Intelligence (GenAI) has fundamentally disrupted one of higher education’s most enduring pedagogical tools: the essay. For centuries, the essay has served as both a means of learning and a method of assessment, asking students to demonstrate research skills, critical thinking, argument construction, and disciplinary knowledge through extended written work. The arrival of tools…

-

Applying the AI Assessment Scale (AIAS): A Step-by-Step Guide for auditing and updating assessment tasks

Generative Artificial Intelligence (GenAI) has created both opportunities and challenges for assessment design and academic integrity. The AI Assessment Scale (AIAS), developed by Perkins, Furze, Roe, and MacVaugh, provides a practical framework to guide educators in making purposeful, evidence-based decisions about appropriate AI use in assessments. Rather than treating AI as a threat to be…